Earthquakes, volcanic eruptions, floods and wildfires are formidable natural phenomena that require rapid but strategic responses to prevent significant loss of life and property. Earth satellite data can enable responders to identify the location, cause and severity of impacts, including property damage. However, these natural disasters often affect large areas, and manually searching through extensive imagery to pinpoint damaged locations and assess the extent of the damage is slow and labor-intensive. Trained analysts who examine the images must integrate their knowledge of an area’s geography and of the specific disaster’s conditions to score building damage. How can this process be sped up to bring accurate information to disaster responders more quickly?

NASA Earth Applied Sciences’ Open Innovation and Disasters program areas joined forces with the Defense Innovation Unit (DIU), part of the Department of Defense, to address just this problem, and they invited the expertise of one key group – the public! DIU’s xView2 Challenge invited image analysts and computer vision experts to participate in an open, international prize competition to create machine learning algorithms that could process pre- and post-disaster imagery to assess building damage.

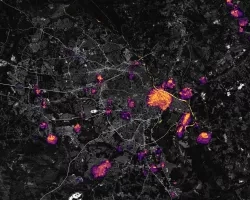

The challenge was supported by a large dataset, xBD, which consists of 850,000 building annotations across more than 45,000 km² of imagery spanning more than 10 countries and six different disaster types. Participants were given a baseline machine learning model created by Carnegie Mellon University’s Software Engineering Institute (CMU SEI) as a starting point for algorithm development, but were also free to develop their own approaches to automatically classifying building damage in the curated satellite imagery.

U.S. and international participants, including 505 different teams from various colleges and universities, submitted more than 2,000 solutions against the xView2 public leaderboard. The top three solutions located buildings and correctly classified their damage as no damage, minor damage, major damage or destroyed over 80% of the time. The winning submissions can be found on the project’s resource website, DIUx-xView.

Plans to apply the winning models to damage assessment are already underway. DIU and CMU SEI are working with the California Air National Guard to deploy the top xView2 algorithms during this year’s California fire season. The models have also been shared with the Australian Department of Defence to assist with its bushfire response efforts.

The Applied Sciences Program’s partnership with DIU on the xView2 Challenge also spurred further collaboration between the two organizations.

NASA’s Sang-Ho Yun, a Disasters program principal investigator and Disaster Response Lead of the Advanced Rapid Imaging and Analysis (ARIA) Project at NASA's Jet Propulsion Laboratory in Southern California, is collaborating on disaster response projects with Nirav Patel and Bryce Goodman at DIU and Ritwik Gupta at CMU SEI and DIU. Together, they are developing strategies for collecting synthetic aperture radar (SAR) and electro-optical remote sensing data to support national and international responses to natural hazards, as well as to other global events such as the ongoing COVID-19 pandemic. SAR imagery will make it possible to monitor human activity and understand changes in patterns of life, which may significantly aid decision-making during global crisis response.

And challenge enthusiasts – there’s more good news for you as well!

The Open Innovation and Disasters programs plan to team up with DIU again to develop the next iteration of the xView challenge (xView3). This is expected to be a SAR version of xView2, allowing participants to use satellite SAR observations to develop machine learning algorithms that support humanitarian assistance for disaster response and recovery.

More information about this DIU challenge can be found in the web posting “A Challenge to Automatically Assess Damaged Buildings After a Disaster.”